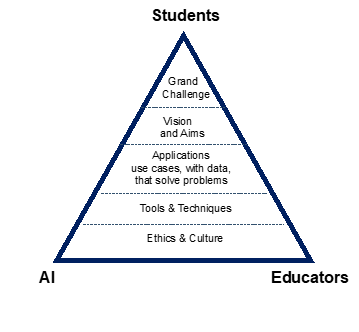

Although not explicitly part of every curriculum, and not on the strategic roadmap of most institutions, various applications of artificial intelligence (AI), such as natural language processing and machine learning, now play an important role in students’ learning. Generative AI, such as ChatGPT, has propelled the agenda forward with a new pedagogic paradigm, one that firmly includes AI alongside students and educators. Let’s call it “Pedagogic Paradigm 4.0”. Figure 1 below illustrates this new paradigm. Although it may appear static, the paradigm will evolve as new learner-facing and educator-facing AI tools are developed.

To illustrate the five building blocks of this new pedagogic paradigm, I will use the example of an AI-based tool we developed to provide formative feedback: our AI Essay-Analyst. We built this tool to help students improve their writing in essays, blogs, reports, dissertations and exams. I use “AI” here as an umbrella term for deep learning, natural language processing, knowledge graphs in machine learning and so on.

1. Defining the grand challenge or overarching problem(s): For our project, we identified several grand challenges and problems that we wanted to address. At the macro level, the UN Sustainable Development Goals (SDGs), specifically SDG 4 and SDG 10, call for quality education and reduced inequalities. At the university level, National Student Survey scores have for several years shown “assessment feedback provision” to be one of the lowest-scoring areas of student satisfaction. Finally, at the micro level, a survey on perceived barriers to academic and employment success, conducted between 2018 and 2020 with 405 undergraduate students across two UK business schools, showed that, on average, students rated academic writing as their main barrier to success.

2. Vision and aims: The University of Warwick is committed to ensuring that students have equal opportunities to thrive and progress during their studies. The aim of any AI-based tool, including one that provides students with formative feedback, is to empower all students, regardless of background, disability, gender, race, sexual orientation or neurodiversity, by giving them equal access to learning. For neurodiversity, and dyslexia in particular, the tool has received endorsement.

3. Applications and use cases: Some might argue that essays and dissertations are not the most effective way to assess students’ learning. However, given the current types of assessment, and the potential to transfer the feedback tool to other types of exams and to oral assessments in future, we decided that developing a formative feedback tool was a worthwhile project. Automated essay scoring was first developed in the US in 1966, as part of “Project Essay Grade”. Advances in natural language processing mean that many AI tools can now be used for formative feedback. Among them are Grammarly, which claims to have 30 million daily users, and Quill, which is used by around 123,000 teachers in 28,000 schools. Turnitin now also provides writing feedback.

4. Tools and techniques: If readers want to develop something in-house, both to be less dependent on external providers and to actually understand the tools rather than work with a “black box”, here are some of the aspects that we incorporated into our in-house feedback tool:

- We used a mixture of non-AI, rule-based statistical features and deep-learning models and libraries (such as PyTorch, the Hugging Face framework and Transformer models). We based our code on open-source resources, mainly GitHub repositories and pre-trained models, and converted and interpreted the results so that the output makes sense to students. We did not use subject-specific labelling or subject-specific supervised learning.

- Our feedback report is structured into four areas: comprehension, analysis, critical thinking and academic writing. As expected, the academic writing feedback is the most comprehensive. It includes the Flesch Reading Ease readability formula, which “tells” students whether their writing is too difficult or too easy to read, and argumentative zoning, which reviews the structure of a piece of writing. Argumentative zoning reports the percentage share of the different sections, such as introduction, methods and results, not by looking at the headings students provide but by analysing each sentence. We also provide a list of grammar suggestions and corrected typos. My favourite part, and that of most students, is the knowledge graph, in which the tool shows the relationships between the concepts and ideas that students wrote about. To visualise a knowledge graph, think of concept maps and cognitive maps. After writing long texts, students find it especially useful to review the knowledge graph, because it lets them check “at a glance” whether what they wrote “makes sense”.

- A warning: we spent more than 2,000 hours developing the tool, counting the time of data scientists, project managers and researchers, with one researcher focusing solely on AI ethics as applied to our tool and our development process. However, if other universities wanted to focus on just one aspect, such as knowledge graphs, developing it would take only a fraction of that time.
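The Flesch Reading Ease check mentioned above is a fixed formula rather than a learned model: 206.835 − 1.015 × (words per sentence) − 84.6 × (syllables per word), where higher scores mean easier text. The Python below is a minimal sketch, not the AI Essay-Analyst’s implementation. It assumes a crude vowel-group syllable counter and punctuation-based sentence splitting, and the helper names `count_syllables`, `flesch_reading_ease` and `flesch_band` are hypothetical.

```python
import re

def count_syllables(word: str) -> int:
    """Approximate syllables by counting vowel groups (a heuristic assumption;
    dictionary-based counters are more accurate)."""
    word = word.lower().strip("'")
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a final 'e' as silent ("make"), except in '-le' endings ("table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease: higher scores indicate easier text."""
    # Split on runs of sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word

def flesch_band(score: float) -> str:
    """Map a score to Flesch's conventional interpretation bands."""
    for threshold, label in [(90, "very easy"), (80, "easy"), (70, "fairly easy"),
                             (60, "standard"), (50, "fairly difficult"),
                             (30, "difficult")]:
        if score >= threshold:
            return label
    return "very difficult"

if __name__ == "__main__":
    score = flesch_reading_ease("The cat sat on the mat.")
    print(round(score, 3), flesch_band(score))  # 116.145 very easy
```

Where exactly an essay becomes “too difficult” or “too easy” is a design decision for whoever builds the tool; the bands above are only Flesch’s general-purpose guide.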
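Argumentative zoning, as described above, labels each sentence rather than relying on headings, then reports each zone’s share of the text. The sketch below illustrates only that aggregation step. The `keyword_zone` classifier and its cue words are hypothetical placeholders: in a system like ours, this step would be a trained sentence classifier, and any function that maps a sentence to a zone label can be passed in through the `classify` parameter.

```python
import re
from collections import Counter
from typing import Callable

# Hypothetical cue words, checked in this order. A real tool would replace
# keyword_zone with a trained sentence classifier.
CUES = {
    "methods": ("we used", "survey", "sample", "data were", "method"),
    "results": ("found", "show", "increase", "decrease", "significant"),
    "introduction": ("this essay", "aim", "argue", "question"),
}

def keyword_zone(sentence: str) -> str:
    """Assign a zone label from simple substring cues (crude placeholder)."""
    lowered = sentence.lower()
    for zone, cues in CUES.items():
        if any(cue in lowered for cue in cues):
            return zone
    return "other"

def zone_shares(text: str,
                classify: Callable[[str], str] = keyword_zone) -> dict[str, float]:
    """Classify every sentence, then return each zone's percentage share."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if not sentences:
        return {}
    counts = Counter(classify(s) for s in sentences)
    return {zone: 100 * n / len(sentences) for zone, n in counts.items()}

if __name__ == "__main__":
    essay = ("This essay examines feedback. We used a survey of students. "
             "Results show writing is a barrier. Further work is needed.")
    print(zone_shares(essay))
    # {'introduction': 25.0, 'methods': 25.0, 'results': 25.0, 'other': 25.0}
```

Swapping in a stronger classifier changes only the `classify` argument; the percentage calculation stays the same.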
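For illustration, the knowledge graph described above can be approximated as a concept co-occurrence graph: concepts become nodes, and two concepts are linked each time they appear in the same sentence. This is a simplified stand-in, not the method used in the AI Essay-Analyst. It assumes the list of concepts is already known (a real system would extract them with NLP, for example from noun phrases), and the function names `build_concept_graph` and `isolated_concepts` are hypothetical. A concept with no links is one rough signal that an idea may not be connected to the rest of the argument.

```python
import itertools
import re
from collections import Counter

def build_concept_graph(text: str, concepts: list[str]) -> Counter:
    """Return edge weights {(concept_a, concept_b): count}, adding one to an
    edge each time both concepts appear in the same sentence."""
    edges: Counter = Counter()
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        lowered = sentence.lower()
        # Whole-word matching so that, e.g., "ai" does not match "said".
        present = sorted({c for c in concepts
                          if re.search(rf"\b{re.escape(c.lower())}\b", lowered)})
        for a, b in itertools.combinations(present, 2):
            edges[(a, b)] += 1
    return edges

def isolated_concepts(concepts: list[str], edges: Counter) -> list[str]:
    """Concepts never linked to any other concept in the text."""
    linked = {c for pair in edges for c in pair}
    return [c for c in concepts if c not in linked]

if __name__ == "__main__":
    essay = ("Feedback improves learning. Assessment shapes feedback. "
             "Equity matters.")
    concepts = ["feedback", "learning", "assessment", "equity"]
    edges = build_concept_graph(essay, concepts)
    for (a, b), weight in edges.items():
        print(f"{a} -- {b} (weight {weight})")
    print("Unlinked:", isolated_concepts(concepts, edges))
```

Here “equity” is mentioned but never connected to another concept, which is the kind of gap a student might spot at a glance in a drawn version of this graph.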

5. Ethics: When developing your own AI applications or buying tools, it is important to be rigorous and to make sure you are doing the “right thing”. In our “Opportunities of AI” working group, we have introduced a “Red Team” that considers ethical decisions. Readers might be interested in the EU’s ethical guidelines for educators on the use of AI and data in teaching and learning, and in Jisc’s summary report on AI in tertiary education. To consider AI ethics in a practical context, including in discussion with students, explore case studies such as that of Rho AI, a data science firm, and its attempt to use artificial intelligence to encourage environmental, social and governance (ESG) investment.

AI presents risks and challenges, but it also offers transformative potential. Successful AI implementation requires the right cultural environment. My final advice is to read up on design thinking and lean start-up, including related concepts of digital innovation such as agility, sprints, adaptability, uncertainty, failing and learning fast, and crowdsourcing, as well as the risk of HiPPOs (deferring to the “highest paid person’s opinion”). Going forward, it is important to strike the right balance between rigour on the one hand and relevance, with mitigated risks, on the other. This balance, together with effective collaboration between universities, will ensure that our sector is ideally placed to develop “AI for good”.

Isabel Fischer is an associate professor (reader) of information systems at Warwick Business School, the University of Warwick.
